About

My name is Taiki Yamamoto, and I am a sophomore in the College of Engineering at the University of California, Berkeley (graduating with a B.S. in May 2026). I am pursuing a Mechanical Engineering major with an Electrical Engineering/Computer Science minor. Before coming to UC Berkeley, I attended Palo Alto High School, where I was captain of the FRC robotics team.

At UC Berkeley, as an engineer on the Accumulator subteam of Formula Electric @ Berkeley (our FSAE EV team), I design and manufacture the car's high-voltage battery. More specifically, I design the battery segments themselves to improve manufacturability, serviceability, and size compared to the previous year's car. Balancing these three traits is difficult, and many of the design decisions affect the design of the rest of the car. Some skills I use are Solidworks, FEA, rapid prototyping, and DFM.

To view my FSAE projects click here.

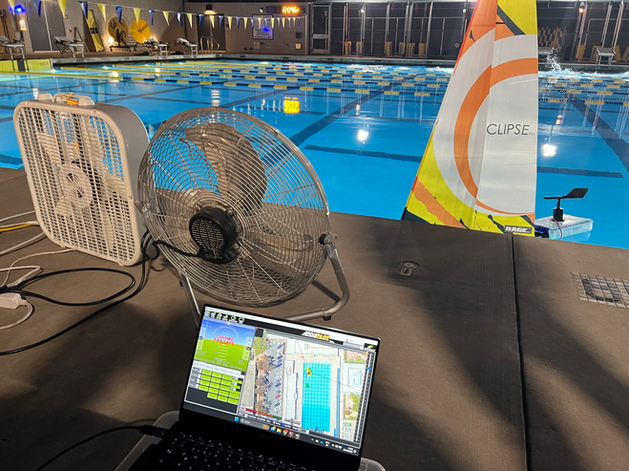

In addition, I previously led a research project in the Theoretical and Applied Fluid Dynamics Lab, mentored by Professor Reza Alam, where I developed a swarm of autonomously controlled sailboat drones. My role covered the hardware design and manufacturing as well as the control system for an unmanned sailboat.

To view my TAFLab projects click here.

In my freshman year, I was heavily involved in the Solar Car Challenge competition. I worked on various subsystems of the car, but I am most proud of my work on the mechanical design of the suspensions.

To view my CalSol projects click here.

In my senior year of high school, I was Team Captain of our FIRST Robotics Competition team. As captain, I oversaw all team operations across administration, technical subteams, and logistics. During the robot building process, I took part in design reviews, facilitated part manufacturing, and helped with robot assembly.

To view my FRC projects click here.

In my free time, I also enjoy making personal projects in order to improve on a skill or explore a new concept. To view my autonomous web camera project click here.

Skills

Manufacturing

- 3D Printer
- Manual Mill
- Lathe
- Laser Cutter
- Metal Bending
- Welding
- CNC Router

CAD

- Solidworks
- HSMWorks
- Fusion 360

Software Engineering

- Python
- Java
- Raspberry Pi

Design Engineering

- Rapid Prototyping

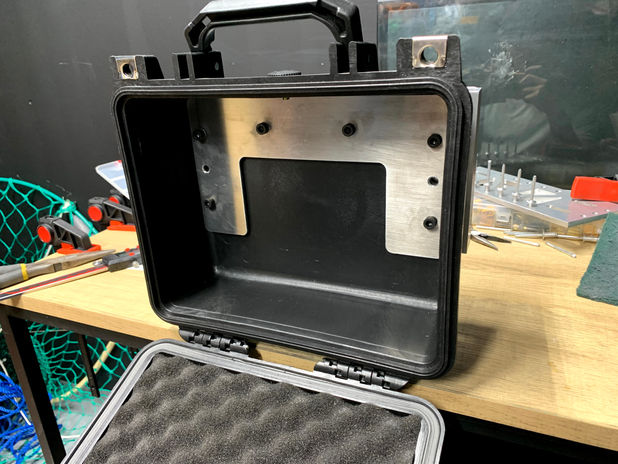

Formula Electric@Berkeley

Accumulator Member

Fall 2023 - Present

- Designed HV busbar routing to meet creepage and clearance requirements.
- Manufactured all 150+ busbars on an OMAX waterjet and reduced material usage by 40%.
- Produced GD&T manufacturing drawings for battery segment structural members.
- Designed an accumulator casing prototype to improve manufacturing efficiency and tolerances.

Theoretical and Applied Fluid Dynamics Lab

Research Lead

Spring 2023 - Winter 2023

- Developed the sail and rudder controls system with ArduPilot for multiple autonomous sailboats.
- Designed rigid sail using NACA 0018 airfoil with internal tubing on Fusion 360.
- Designed hull with a prismatic coefficient of 0.54 and midsection coefficient of 0.8 on Fusion 360.

CalSol

Suspensions Subteam Member

September 2022 - March 2023

- Performed Solidworks FEA on new bracket designs with factors of safety up to 3.
- Designed suspension shock brackets for new ground clearance regulations and integration.
- Performed layups for the floor of the carbon fiber chassis.

FRC Team 8

Team Captain

July 2021 - May 2022

- Tested the first fully assembled iteration for flaws in the ball path from the intake to the shooter mechanism.
- Led assembly of ball singulator and intake mechanisms.
- Made design decisions for mechanisms with machining capabilities in mind.
- Oversaw technical, administrative, and logistical processes for a team of 80+ members.

Build Subteam Captain

August 2020 - July 2021

- Fixed the robot's drivetrain chain, singulator belts, and intake chain length for online competition.
- Returned to the lab to teach the freshmen how to use all of the machines while following COVID protocols.
- Restructured build subteam recruitment for an online setting, keeping it close to the original format.

Lab Manager

August 2019 - July 2020

- Led the prototyping of the intake, which finished within the first week of the build season.
- Assembled the drivetrain and the second iteration of the ball singulator mechanisms.
- Designed, fabricated, and assembled a new metal robot cart to replace the clunky old one.
- Created a simple and sustainable system for lathe tooling by establishing a website for future purchases.

Autonomous Face Tracking Camera

My Objective

In the winter of 2021, two friends and I came up with the idea of an autonomous tracking web camera to make my younger brother's online physical and occupational therapy sessions much easier. Because he had to move around during his therapy sessions, he went out of frame quite often, which made the sessions difficult and inefficient for the therapist. I also found that most products like this on the market at the time were neither affordable nor user-friendly for people with disabilities or younger kids. Initially, we planned to test the camera only with my younger brother, but later on we worked with a local therapist from AbilityPath (a special needs community advocacy organization in the Bay Area), who tested our prototype.

Our main technical requirement was that the webcam be completely stand-alone: the user should not have to download or install any software onto their device (just plug in and use).

Technical Details

My work began with researching the electronic hardware compatibility between cameras and microcontrollers that would fit our stand-alone design. After learning that an Arduino would need a separate computer to run the software, I decided to go with a Raspberry Pi, which can run all of the software itself. I researched the possibility of using a single camera to provide both the video shown on the video call and the video used by the face tracking software. With regular web cameras, there did not seem to be a simple way to handle both functions, so we went with two cameras. In the end, the webcam works as follows: the face tracking algorithm looks for a face in one camera's video feed. If a face is outside the innermost bounding box (set by us) of the camera's frame, the 2-axis gimbal servo system pans and tilts to bring the face into the bounding box. If no face is detected at all, the gimbal roams until a face is detected, and then pans and tilts until the face is inside the bounding box.
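The per-frame decision described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `Box` class, the `next_action` function, the action names, and the 640x480 frame with a centered inner box are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates (hypothetical helper)."""
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def next_action(face_center: Optional[Tuple[float, float]], inner_box: Box) -> str:
    """Choose what the gimbal should do for one video frame."""
    if face_center is None:
        return "roam"   # no face detected: sweep until one appears
    if inner_box.contains(*face_center):
        return "hold"   # face already inside the bounding box: stay put
    return "track"      # face outside the box: pan/tilt toward it


# Assumed 640x480 frame with a centered inner bounding box.
inner = Box(left=220, top=140, right=420, bottom=340)
for face in (None, (320, 240), (600, 100)):
    print(face, "->", next_action(face, inner))
```

Keeping the decision separate from the detector and the servo driver means the same logic can be exercised on a desktop before anything is wired to the Raspberry Pi.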

I worked with one of my friends on the software that integrates the face-tracking algorithm, vector calculation, and servo control on the Raspberry Pi so that it is completely stand-alone. First, I had to figure out which pretrained face detector would work in our case. I started by getting the OpenCV Haar cascade face detector to work on images and then on a video feed. By testing multiple lighting conditions, movements (jumping, running, walking, and going out of frame), and distances from the camera, we noticed that the Haar cascade had detection issues and took too much processing power for the Raspberry Pi to track in real time. We needed a tracker that handled multiple lighting conditions and used less processing power. After testing 4 new trackers, we found that the MTCNN tracker was the quickest and worked at the greatest distance. Once the face tracking algorithm was established, we worked out the vector calculations that translate the distance from the face to the bounding box into servo movement. To get the fully integrated prototype running, I wrote the code that puts all of these functions together.
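One way such a vector calculation could look is sketched below. It is only an illustration under stated assumptions: the function names, the linear pixel-to-degree conversion, the 60 by 45 degree field of view, the 0 to 180 degree servo range, and the sign convention (positive offsets increase the angle) are all hypothetical and would depend on the actual camera and gimbal mounting.

```python
from typing import Tuple


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def box_offset(face: Tuple[float, float],
               box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Pixel vector from the nearest point of the box to the face center.

    Returns (0, 0) when the face is already inside the box.
    """
    x, y = face
    left, top, right, bottom = box
    return x - clamp(x, left, right), y - clamp(y, top, bottom)


def pixels_to_degrees(offset_px: Tuple[float, float],
                      frame_size: Tuple[int, int],
                      fov_deg: Tuple[float, float]) -> Tuple[float, float]:
    """Approximate the angular step by scaling pixels by the field of view."""
    (dx, dy), (width, height), (h_fov, v_fov) = offset_px, frame_size, fov_deg
    return dx * h_fov / width, dy * v_fov / height


def update_angles(angles: Tuple[float, float],
                  step_deg: Tuple[float, float],
                  limits: Tuple[float, float] = (0.0, 180.0)) -> Tuple[float, float]:
    """Apply the step to the current pan/tilt angles within servo limits."""
    pan, tilt = angles
    return (clamp(pan + step_deg[0], *limits),
            clamp(tilt + step_deg[1], *limits))


# Assumed values: 640x480 frame, 60x45 degree field of view, servos centered at 90.
offset = box_offset((600, 100), (220, 140, 420, 340))
step = pixels_to_degrees(offset, (640, 480), (60.0, 45.0))
print(offset, step, update_angles((90.0, 90.0), step))
```

Measuring the offset to the edge of the box rather than to its center moves the gimbal only as far as needed to bring the face back inside.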

Once the code was written and working in tests on a regular desktop computer, it was time to make the Raspberry Pi execute everything on its own. I looked into the Raspberry Pi pin layout and wired up all the servo motors and the camera input. With all of the hardware assembled, I was able to test the code for failure cases. One issue we came across was that the servo motors did not step quickly enough to follow fast movements, because the vector calculation always drove the gimbal to wherever it had last detected the face. To fix this, I made the bounding box a little larger and ran the vector calculation less frequently, which both lowered the processing power required and made the movements more efficient.
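The two adjustments described above (a slightly larger bounding box and less frequent vector calculations) might be sketched like this. The names `grow_box` and `EveryNthFrame`, the 30-pixel margin, and the every-third-frame rate are hypothetical values chosen for illustration, not the ones used in the project.

```python
from typing import Tuple

BoxTuple = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def grow_box(box: BoxTuple, margin: int) -> BoxTuple:
    """Enlarge the bounding box so small movements no longer trigger the servos."""
    left, top, right, bottom = box
    return left - margin, top - margin, right + margin, bottom + margin


class EveryNthFrame:
    """Gate that lets the vector calculation run only on every n-th frame."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("n must be at least 1")
        self.n = n
        self.count = 0

    def ready(self) -> bool:
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


# Assumed values: 30-pixel margin, recalculate on every third frame.
box = grow_box((220, 140, 420, 340), margin=30)
gate = EveryNthFrame(3)
schedule = [gate.ready() for _ in range(6)]
print(box, schedule)
```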

Results/Takeaways

The completely stand-alone prototype was made with the user in mind: the web camera only had to be plugged into a power outlet and into the device the user was using for their video call. When my younger brother and the therapist used the camera in their respective therapy sessions, it kept up with most movements and never stopped unexpectedly. However, there are some things I would want to improve: tracking at distances greater than 3 meters, the limitation of tracking only the face, and the need for a separate number pad keyboard. To improve the tracking range, I would have to train my own face-tracking model with machine learning. We knew early on that the camera could only track the face and accepted it as something to work on in further prototypes; integrating a combination of body part trackers could address this. We attached a number pad keyboard to the Raspberry Pi so that the user could start the tracking whenever they wished and otherwise use the device as a normal webcam with manual control of the camera angle. However, this could have been replaced with buttons on the main body of the webcam. This project taught me a great deal about coding and microcontrollers, as well as about working with a user, which I find very interesting.

If you are interested, here is the GitHub repository of the auto face tracking webcam code.

Electric Bike

My Objective

In the summer of 2019, I had the idea of converting the bike I use to commute to school every day into a chain-driven electric bike. The two main reasons I pursued this project were that I wanted a much faster commute to school and, since this was my first major project, that I wanted to expand my knowledge of CAD, CAM, and electrical engineering. The design constraints I set for myself included keeping the gear shifting capability so that I could ride uphill with ease and keeping the center of mass as close as possible to the original.

Technical Details

I started with the CAD for the motor mounts, which needed to match the shape of the frame accurately. To do so, I took a picture of the frame's profile and measured its dimensions so that I could model the frame to scale in Solidworks. With the frame modeled, I designed the 4 mounting plates that hold the 48V 1600W hub motor which drives the whole bike. After designing the mounting plates, I used a laser cutter to cut wooden versions of the side plates to check that my frame geometry and mounting holes matched the actual bike. Since the plates had the correct geometry, I machined all of the parts using the CNC router, manual mill, and deburring tool.

Next, I had to change out some of the existing parts on the bike to make the e-bike conversion work. One of the modifications was replacing the main sprocket with a dual sprocket stack and freewheel. This combination allowed the original chain to run from the main sprocket to the back wheel cassette and a new chain to run from the hub motor to the main sprocket. In addition, the pedals still work as they did on the original bike, so I can pedal if need be.

The electronics of the e-bike included the battery, speed controller, throttle, and motor. I assembled 2 battery packs using 18650 lithium-ion cells (7 in series and 8 in parallel) for a combined nominal voltage of 50.4V. I chose this layout to balance voltage output and capacity for a quick and long-range bike. To hold the cells in place, I designed my own hexagonal-style holders, sized from the measured width of the nickel strips and diameter of the cells, and 3D printed them. To assemble the battery packs, I used a spot welder and nickel strips to connect the cells in series and parallel, and a soldering iron to add the power leads and battery management system.
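The 50.4V figure can be checked with a short calculation. Two values below are assumptions that the text does not state: a nominal cell voltage of 3.6 V (typical for 18650 cells) and the two packs being wired in series. Under those assumptions, 2 x 7 x 3.6 V reproduces the stated 50.4V.

```python
# Values from the text: 7 cells in series and 8 in parallel per pack, 2 packs.
CELLS_IN_SERIES = 7
CELLS_IN_PARALLEL = 8
PACK_COUNT = 2

# Assumptions (not stated in the text).
CELL_NOMINAL_VOLTS = 3.6       # typical 18650 nominal voltage
PACKS_WIRED_IN_SERIES = True   # the reading that yields 50.4 V combined


def pack_voltage(series_cells: int, cell_volts: float) -> float:
    """Series cells add their voltages; parallel groups leave voltage unchanged."""
    return series_cells * cell_volts


def combined_voltage(pack_volts: float, pack_count: int, in_series: bool) -> float:
    """Packs in series add voltage; packs in parallel keep the single-pack voltage."""
    return pack_volts * pack_count if in_series else pack_volts


def total_cells(series_cells: int, parallel_cells: int, pack_count: int) -> int:
    return series_cells * parallel_cells * pack_count


one_pack = pack_voltage(CELLS_IN_SERIES, CELL_NOMINAL_VOLTS)
combined = combined_voltage(one_pack, PACK_COUNT, PACKS_WIRED_IN_SERIES)
cells = total_cells(CELLS_IN_SERIES, CELLS_IN_PARALLEL, PACK_COUNT)
print(f"one pack: {one_pack:.1f} V, combined: {combined:.1f} V, cells: {cells}")
```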

I designed the battery pack holders and mounted them right behind the handlebar to keep the center of mass near the middle of the bike. I designed the mount in Solidworks by measuring the bike frame thickness and the cases. I bolted the cases to the mount using a sandwiching method and fixed the mount to the frame with hose clamps.

Finally, after the last soldering and crimping to connect all of the electronic components, the e-bike was finished.

Results/Takeaways

This project was successful in that the e-bike has now lasted for over 3 years without breaking down. Through this project, I strengthened my skills in machining, electronics, and design, which formed my basis in effective engineering early on. My e-bike has also inspired many younger high schoolers to build their own.